
| Interface | Description |
|---|---|
| ConnectionListener | This interface allows applications to receive events about the state of DataSource for Java's connection to other DataSource peers. |
| DataSource | This is the main interface of DataSource for Java. |
| DataSourceConfiguration | This interface allows public access to values inside a DataSource configuration file. |
| Peer | Represents a DataSource peer. |
| PeerStatusEvent | Event raised when a peer changes status. |
| SubjectErrorEvent | Represents an event raised when there is an error in a subscription to a subject. |
| SubjectStatusEvent | Represents an event that is raised when there is a change in the status of a subject. |

| Class | Description |
|---|---|
| DataSourceFactory | A factory used to create DataSource instances. |

| Enum | Description |
|---|---|
| PeerStatus | Enumeration that defines the states of a DataSource Peer. |
| SubjectError | Enumeration that defines the possible types of subject error. |
| SubjectError.Flags | |
| SubjectStatus | Enumeration that defines the status values that a subject can have. |

It is recommended that you read the DataSource Overview to gain an understanding of:

For more information on how to use DataSource for Java, navigate the links in the following table:

DataSource for Java provides the following capabilities

Version 6.1 of the DataSource for Java SDK provides a new API designed to make developing Caplin DataSource applications easier.

- Publishers, which publish data supplied by a DataProvider. Several kinds of Publisher interface are provided to cover common use cases, such as broadcasting messages and sending messages on a subscription request/discard basis.
- Subscriptions, which allow you to easily subscribe to incoming data from other DataSource peers. The BroadcastSubscription allows you to register as a listener to all incoming messages with subjects that match a given namespace.
- Channels, which allow you to perform bi-directional communication with StreamLink clients. An example use case for channels might be to implement trade messaging between a DataSource application and a StreamLink client.
- Logging control: you can pass a Logger when creating a DataSource and it will be used by the DataSource library. This allows you to implement logging in the way you choose.

The example shown in this document shows how to use the common features of the new API. Specific code examples are provided in the API documentation for the relevant classes and methods.

Three example applications are provided that demonstrate best practice usage of the API. See the example applications section for more details.

You typically use the DataSource API to integrate an external entity that provides data into a DataSource network. The basic steps for doing this are:

1. Implement the DataProvider interface, to provide data that your DataSource application can send to connected DataSource peers. Your implementation would typically interface with an external entity that supplies the data.
2. Obtain an instance of the DataSource class using the DataSourceFactory.
3. Create a Publisher. The Publisher publishes messages to other DataSource peers that are connected to this DataSource, and determines the subscription management strategy (see Publishers).
4. Register the DataProvider with the Publisher, so that the Publisher has a source of data that it can publish.
5. Start the DataSource.

DataSource for Java 6.0 is a layer on top of the existing DataSource 4.4 library. It works by exposing the new DataSource 6.0 API for client applications to use, and internally uses the DataSource 4.4 library for low level functions such as establishing connections and sending data packets.

The DataSource 4.4 API is no longer available for use by client applications.

It is recommended that you upgrade your DataSource applications to use the DataSource 6.0 API if possible. The new API simplifies application development by reducing the amount of peer management required, introduces helpful abstractions such as Publishers and Channels, and simplifies the logging process.

The DataSource 6.0 API is significantly different to the previous API.

The following examples show how some of the common DataSource features can be accessed using the DataSource 6.0 API. The

first example shows how to construct a DataSource instance.

```java
import java.io.File;
import java.io.IOException;
import java.util.logging.Logger;

import org.xml.sax.SAXException;

import com.caplin.datasource.DataSourceFactory;
import com.caplin.datasrc.DataSource;

public class DataSourceConstruction
{
    @SuppressWarnings("unused")
    public static void main(String[] args) throws IOException, SAXException
    {
        // old method of creating a DataSource
        com.caplin.datasrc.DataSource oldDS = new DataSource(
                new File("conf/DataSource.xml"),
                new File("conf/Fields.xml"));

        // new method of creating a DataSource
        com.caplin.datasource.DataSource newDS = DataSourceFactory.createDataSource(
                args,
                Logger.getAnonymousLogger());
    }
}
```

There are several points to note about this example.

- The root package for DataSource 6.0 classes and interfaces is com.caplin.datasource. The root package for DataSource 4.4 classes and interfaces is com.caplin.datasrc.
- Instances of the com.caplin.datasource.DataSource class are obtained from the DataSourceFactory rather than constructed directly as in DataSource 4.4.
- The new way to create a DataSource is to pass in an array of command line arguments, from which DataSource for Java can parse the location of configuration files. This is a departure from DataSource 4.4, which took File arguments defining where the configuration files were located, and frequently led to hard-coding of file locations. For further details on how command line option parsing works, see DataSourceFactory.createDataSource(String[], Logger).
- The factory method for creating DataSource instances also takes a Logger. This allows you to have full control of how event logging is implemented. Previously the event logger would be constructed by DataSource for Java based on the options specified in your DataSource configuration XML file.

The following example shows how to listen to connection events from peers.

```java
import java.io.File;
import java.io.IOException;
import java.util.logging.Logger;

import org.xml.sax.SAXException;

import com.caplin.datasource.ConnectionListener;
import com.caplin.datasource.DataSourceFactory;
import com.caplin.datasource.PeerStatusEvent;
import com.caplin.datasrc.DSMessageListener;
import com.caplin.datasrc.DataSource;

public class ConnectionListenerExample
{
    public static void main(String[] args) throws IOException, SAXException
    {
        // old method of adding a connection listener
        com.caplin.datasrc.DataSource oldDS = new DataSource(
                new File("conf/DataSource.xml"),
                new File("conf/Fields.xml"));

        oldDS.setDSMessageListener(new DSMessageListener() {
            @Override
            public void receiveMessage(int peerIndex, int messageType, int peerID, String peerName)
            {
                // handle connection events
            }
        });

        // new method of adding a connection listener
        com.caplin.datasource.DataSource newDS = DataSourceFactory.createDataSource(
                args,
                Logger.getAnonymousLogger());

        newDS.addConnectionListener(new ConnectionListener() {
            @Override
            public void onPeerStatus(PeerStatusEvent peerStatusEvent)
            {
                // handle connection events
            }
        });
    }
}
```

The main improvement here is in naming. The old approach required you to implement a DSMessageListener, which

would receive callbacks containing several confusingly named arguments. In DataSource 6.0 you implement a ConnectionListener

which receives callbacks containing a simple object that provides access to the peer that has changed status and the new status

of the peer.

The following example shows how to listen to requests and discards from peers.

```java
import java.io.File;
import java.io.IOException;
import java.util.logging.Logger;

import org.xml.sax.SAXException;

import com.caplin.datasource.DataSourceFactory;
import com.caplin.datasource.namespace.PrefixNamespace;
import com.caplin.datasource.publisher.DataProvider;
import com.caplin.datasource.publisher.DiscardEvent;
import com.caplin.datasource.publisher.RequestEvent;
import com.caplin.datasrc.DSDiscardListener;
import com.caplin.datasrc.DSRequestListener;
import com.caplin.datasrc.DataSource;

public class RecordExample
{
    public static void main(String[] args) throws IOException, SAXException
    {
        // old method of receiving requests and discards
        com.caplin.datasrc.DataSource oldDS = new DataSource(
                new File("conf/DataSource.xml"),
                new File("conf/Fields.xml"));

        class MyVersion44RequestAndDiscardListener implements DSRequestListener, DSDiscardListener
        {
            @Override
            public void receiveDiscard(int peerIndex, String[] subjects)
            {
                // handle discards
            }

            @Override
            public void receiveRequest(int peerIndex, String[] subjects)
            {
                // handle requests
            }
        }

        MyVersion44RequestAndDiscardListener myListener = new MyVersion44RequestAndDiscardListener();
        oldDS.setDSRequestListener(myListener);
        oldDS.setDSDiscardListener(myListener);

        // new method of receiving requests and discards
        com.caplin.datasource.DataSource newDS = DataSourceFactory.createDataSource(
                args,
                Logger.getAnonymousLogger());

        class MyVersion5RequestAndDiscardListener implements DataProvider
        {
            public MyVersion5RequestAndDiscardListener(com.caplin.datasource.DataSource newDS)
            {
                // register this DataProvider for all subjects that start with /FX
                newDS.createActivePublisher(new PrefixNamespace("/FX"), this);
            }

            @Override
            public void onRequest(RequestEvent requestEvent)
            {
                // handle requests
            }

            @Override
            public void onDiscard(DiscardEvent discardEvent)
            {
                // handle discards
            }
        }

        new MyVersion5RequestAndDiscardListener(newDS);
    }
}
```

In both cases you are required to implement a class that receives requests and discards from peers. However there are several improvements in DataSource 6.0:

- In DataSource 4.4 you had to implement both a DSRequestListener and a DSDiscardListener. In practice the same class is almost always responsible for handling both. Therefore in DataSource 6.0 you only need to implement a DataProvider, which is responsible for handling both requests and discards.
- In DataSource 4.4, each instance of DSRequestListener would receive every request, and each instance of DSDiscardListener would receive every discard. This usually required you to write a switch statement in order to determine how to handle the request or discard, based on the subject. DataSource 6.0 simplifies this process by allowing you to register DataProviders for a specific namespace, in this case all subjects that start with /FX. Requests or discards for subjects that do not match the namespace will not be passed to your DataProvider. If a requested subject does not match any of the registered DataProviders, DataSource for Java will automatically send a not found response to the requesting peer.

For further details on how to use the DataSource 6.0 API, see the migration example applications included in the DataSource for Java kit.
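The namespace-based dispatch described above can be pictured with a small stand-alone sketch. It does not use the DataSource for Java API: SubjectRouter and its methods are hypothetical names, and the "first registered prefix wins" rule is an assumption made for illustration. It only shows the idea that a request reaches a handler when its subject matches a registered prefix, and is otherwise treated as not found.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Consumer;

/**
 * Illustrative only: routes a requested subject to the first handler whose
 * prefix matches. SubjectRouter is a hypothetical class, not part of the
 * DataSource for Java API.
 */
final class SubjectRouter {
    // Registration order is preserved so that the first matching prefix wins (an assumption).
    private final Map<String, Consumer<String>> handlersByPrefix = new LinkedHashMap<>();

    void register(String prefix, Consumer<String> handler) {
        handlersByPrefix.put(prefix, handler);
    }

    /** Returns true if a handler received the subject, false if no prefix matched. */
    boolean route(String subject) {
        for (Map.Entry<String, Consumer<String>> entry : handlersByPrefix.entrySet()) {
            if (subject.startsWith(entry.getKey())) {
                entry.getValue().accept(subject);
                return true;
            }
        }
        // No registered prefix matched: this is the case in which the text above
        // says a not found response is sent to the requesting peer.
        return false;
    }
}
```

With a handler registered for "/FX", a request for "/FX/EURUSD" reaches the handler, while a request for "/EQ/VOD.L" returns false without calling any handler.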

There are two compatibility issues you should be aware of when migrating to DataSource for Java 6.0.

Versions of DataSource for Java prior to 6.0 required a minimum JVM version of 1.5. Starting from DataSource for Java version 6.0, the minimum JVM version required is 1.6.

The behaviour of the logging subsystem within DataSource for Java 6.0 has been simplified and compatibility with components using the DataSource for C SDK improved. This may mean that you will have to change your DataSource configuration files.

- The logRotateHours attribute has been removed. If you are using logRotateHours, you will need to migrate to using the cyclePeriod attribute, where the value is specified in minutes.
- The defaultFileName attribute has been removed. The pattern tag should now be used to configure log file names.

If your DataSource configuration XML file contains legacy configuration options that have now been removed, then your DataSource application will fail to start up. If this happens you should compare your DataSource configuration XML file with the new schema and remove any configuration options that are no longer supported.

In addition, it is recommended that you no longer use the logging tag in your DataSource configuration

XML file for event logging; instead, pass an instance of Logger to your

DataSource when you construct it in order to have full control of how event logging is

implemented in your application. If you take this approach then you can remove the logging section from your

DataSource configuration XML file altogether.
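As a rough illustration of what "full control" of event logging can look like, the sketch below builds a java.util.logging.Logger with its own handler and level, using only standard JDK classes. EventLoggerSetup and CollectingHandler are hypothetical names chosen for this example; in a real application the handler would more likely write to a file.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

/** Illustrative helper (hypothetical name) that prepares an event Logger. */
final class EventLoggerSetup {

    /** Keeps log lines in memory; a stand-in for a file-based handler. */
    static final class CollectingHandler extends Handler {
        final List<String> lines = new ArrayList<>();

        @Override
        public void publish(LogRecord record) {
            if (isLoggable(record)) {
                lines.add(record.getLevel() + " " + record.getMessage());
            }
        }

        @Override
        public void flush() {
            // nothing is buffered
        }

        @Override
        public void close() {
            // no resources to release
        }
    }

    /** Creates a Logger that sends INFO and above to the given handler only. */
    static Logger createEventLogger(Handler handler) {
        Logger logger = Logger.getAnonymousLogger();
        logger.setUseParentHandlers(false); // keep events off the default console handler
        logger.setLevel(Level.INFO);
        logger.addHandler(handler);
        return logger;
    }
}
```

The Logger returned by createEventLogger would then be passed as the second argument to DataSourceFactory.createDataSource(args, logger), in the same way that the examples in this document pass Logger.getAnonymousLogger().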

The packet log must still be configured using the DataSource configuration XML file.

For further details on logging configuration, refer to the DataSource configuration XML file schema. If your current DataSource configuration XML file uses options that are no longer supported and you need help in order to set up the same logging configuration with the new options, please contact Caplin Support.

DataSource applications are configured using the Caplin DataSource Configuration Format as described in the DataSource For C Configuration Syntax Reference.

The XML configuration format is now deprecated.

(The specification for the XML format can be found here: DataSource for Java XML Configuration (Deprecated))

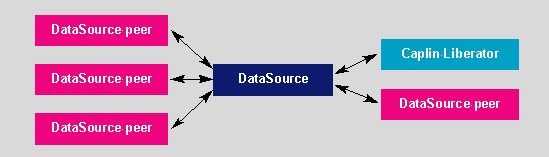

A DataSource application is bi-directional: it can send data to, and receive data from, another DataSource application. In addition, a DataSource can either connect to, or listen for connections from, another DataSource.

These other DataSource applications are referred to as Peers.

Caplin Liberator and Caplin Transformer are examples of DataSource peers.

DataSource messages are often referred to as "objects" when handled by a DataSource application; Liberator documentation refers to objects in this way. Objects can be requested from individual DataSource peers or groups of peers.

An active DataSource is one that will service requests for objects (the opposite of this is a broadcast DataSource, which simply sends all objects and updates to its peers regardless). It is an active DataSource's responsibility to keep track of which objects have been requested and send updates for those objects only.

When a StreamLink user requests an object, and Caplin Liberator does not already have it, Liberator requests it from one or more of its active DataSource peers. If another user subsequently requests that object, Caplin Liberator will already have all the information it needs, and will respond to the user's request immediately.

Objects may be discarded as well as requested. This tells the DataSource that the sender of the discard request no longer wishes to receive updates for the object. When a user logs out or discards an object, Caplin Liberator sends a discard message to the active DataSource (as long as no other user is subscribed to that object). The object will actually be discarded by the DataSource one minute after the user requested the discard; this prevents objects being requested and discarded from the supplying DataSource unnecessarily.
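The delayed discard described above can be modelled with a small bookkeeping class. This is a sketch under assumptions, not the library's or Liberator's actual mechanism: DelayedDiscardTracker is a hypothetical name, time is passed in explicitly as milliseconds, and a new request inside the delay window is assumed to cancel the pending discard.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

/** Illustrative model (hypothetical class) of discards that take effect after a delay. */
final class DelayedDiscardTracker {
    static final long DISCARD_DELAY_MILLIS = 60_000L; // one minute, as described in the text

    // subject -> time in milliseconds at which the pending discard takes effect
    private final Map<String, Long> pendingDiscards = new HashMap<>();

    /** Records a discard that will take effect one minute after nowMillis. */
    void onDiscard(String subject, long nowMillis) {
        pendingDiscards.put(subject, nowMillis + DISCARD_DELAY_MILLIS);
    }

    /** A new request inside the delay window cancels the pending discard (an assumption). */
    void onRequest(String subject) {
        pendingDiscards.remove(subject);
    }

    /** Returns the subjects whose delay has elapsed and removes them from the pending set. */
    List<String> collectExpired(long nowMillis) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = pendingDiscards.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (entry.getValue() <= nowMillis) {
                expired.add(entry.getKey());
                it.remove();
            }
        }
        return expired;
    }
}
```

The delay means that a subject which is discarded and then requested again within the minute never has to be dropped and re-requested from the supplying DataSource.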

The API includes several types of Publisher that implement the

Publisher interface. You create an instance of a particular

Publisher by calling a createXXXPublisher() method on the

DataSource instance.

The ActivePublisher passes all

subscription requests from remote DataSource peers to the DataProvider,

but only passes a discard when all of the remote peers have discarded the subject.

The DataProvider implementation is not responsible for keeping

track of the number of requests received; it just needs to stop supplying data

for a subject when it receives the single discard.

The BroadcastPublisher publishes

messages to all connected DataSource peers. The use of a BroadcastPublisher

is not recommended due to the detrimental effects that broadcast data has on

reliable and predictable failover between DataSource components.

The CompatibilityPublisher is

provided for backwards compatibility with DataSource applications that use

versions of DataSource for Java older than 6.0.

This publisher is similar to ActivePublisher except that it

passes a discard to the DataProvider every time a peer discards

a subject, rather than a single discard when the last peer discards the

subject. This requires the DataProvider to do more work, by

keeping count of how many peers are subscribed to each subject and only

unsubscribing from the back end system when the last peer discards the

subject.
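The extra bookkeeping that a DataProvider needs when it is used with a CompatibilityPublisher might look like the sketch below. SubscriptionCounter is a hypothetical helper, not part of the API; it only counts requests and discards per subject so that the caller knows when to subscribe to, and unsubscribe from, the back end system.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative per-subject peer counter (hypothetical class). */
final class SubscriptionCounter {
    private final Map<String, Integer> peersBySubject = new HashMap<>();

    /** Returns true for the first request of a subject: subscribe to the back end now. */
    boolean onRequest(String subject) {
        return peersBySubject.merge(subject, 1, Integer::sum) == 1;
    }

    /** Returns true when the last peer discards a subject: unsubscribe from the back end now. */
    boolean onDiscard(String subject) {
        Integer count = peersBySubject.get(subject);
        if (count == null) {
            return false; // discard for a subject that was never requested; ignore it
        }
        if (count == 1) {
            peersBySubject.remove(subject);
            return true;
        }
        peersBySubject.put(subject, count - 1);
        return false;
    }
}
```

With an ActivePublisher this counting is not needed in the DataProvider, because only a single discard is passed on once every peer has discarded the subject.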

A namespace determines which particular subjects can have their data

published by a given Publisher. The rules defining what

subjects are in a particular namespace are determined by implementations of

the Namespace interface. A

Namespace instance is associated with a Publisher

when you call the createXXXPublisher() method on the

DataSource. The Publisher uses

the Namespace to ensure that its associated

DataProvider only receives requests for subjects in a specified

namespace.

You can write your own implementation of Namespace, but the

API provides some ready-made implementations that should be suitable for

most situations - see PrefixNamespace and RegexNamespace.

For example, PrefixNamespace ensures that data is only published if its subject matches the specified prefix. So, if the prefix supplied in the constructor for an instance of PrefixNamespace is "/I/", data with the subject "/I/VOD.L" will be published, whereas data with the subject "/R/VOD.L" will not be published.
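The matching rules can be illustrated without the library. In the sketch below, SubjectMatcher, PrefixMatcher and RegexMatcher are hypothetical stand-ins for the Namespace interface and its ready-made implementations; the real interface may use different method names, and whether RegexNamespace requires the whole subject to match the expression is an assumption here.

```java
import java.util.regex.Pattern;

/** Hypothetical stand-in for a namespace rule: decides whether a subject belongs. */
interface SubjectMatcher {
    boolean matches(String subject);
}

/** Matches every subject that starts with the given prefix. */
final class PrefixMatcher implements SubjectMatcher {
    private final String prefix;

    PrefixMatcher(String prefix) {
        this.prefix = prefix;
    }

    @Override
    public boolean matches(String subject) {
        return subject.startsWith(prefix);
    }
}

/** Matches subjects against a regular expression; the whole subject must match. */
final class RegexMatcher implements SubjectMatcher {
    private final Pattern pattern;

    RegexMatcher(String regex) {
        this.pattern = Pattern.compile(regex);
    }

    @Override
    public boolean matches(String subject) {
        return pattern.matcher(subject).matches();
    }
}
```

A PrefixMatcher created with "/I/" accepts "/I/VOD.L" and rejects "/R/VOD.L", which mirrors the PrefixNamespace example above.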

This example shows how to create a DataSource instance and create a

broadcast publisher for sending updates.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

import com.caplin.datasource.ConnectionListener;
import com.caplin.datasource.DataSource;
import com.caplin.datasource.DataSourceFactory;
import com.caplin.datasource.PeerStatusEvent;
import com.caplin.datasource.messaging.record.RecordType1Message;
import com.caplin.datasource.namespace.PrefixNamespace;
import com.caplin.datasource.publisher.BroadcastPublisher;

public class OverviewExample implements ConnectionListener
{
    private static final Logger logger = Logger.getAnonymousLogger();

    public static void main(String[] args)
    {
        new OverviewExample();
    }

    public OverviewExample()
    {
        // Create an argument array. In practice these would probably be the command line arguments.
        String[] args = new String[2];
        args[0] = "--config-file=conf/DataSource.xml";
        args[1] = "--fields-file=conf/Fields.xml";

        // Create a DataSource.
        DataSource datasource = DataSourceFactory.createDataSource(args, logger);

        // Register as a connection listener.
        datasource.addConnectionListener(this);

        // Create a broadcast publisher with a Namespace that will match everything.
        BroadcastPublisher publisher = datasource.createBroadcastPublisher(new PrefixNamespace("/"));

        // Now start the DataSource.
        datasource.start();

        // Updates can be broadcast using the publisher, for example a record:
        RecordType1Message record = publisher.getMessageFactory().createRecordType1Message("/BROADCAST/MYRECORD");
        record.setField("FieldName", "FieldValue");
        publisher.publishInitialMessage(record);
    }

    @Override
    public void onPeerStatus(PeerStatusEvent peerStatusEvent)
    {
        logger.log(Level.INFO, String.format("Peer status event received: %s", peerStatusEvent));
    }
}
```

One of the best ways to gain an understanding of how to use the DataSource for Java API is to look at some example DataSource applications. The Caplin Integration Suite kit includes three example applications that illustrate best practice when using the DataSource for Java API, plus a migration example that shows how to upgrade an existing DataSource application to use the version 6.0 API.

The example applications use two notional companies to illustrate a typical use case for DataSource for Java:

- NovoBank is a bank or financial institution that wishes to build a trading application using Caplin Products. The NovoBank developers will be responsible for writing the Integration Adapter using the DataSource for Java API. The DataSource will communicate with an external trading system provided by XyzTrade.
- XyzTrade is an external company that provides an order management system and streaming rates. XyzTrade provides NovoBank with an API to request rates and perform trades. In practice the XyzTrade API would be a "black box", but for the purpose of these examples the source code for the XyzTrade system is provided.

The three example applications perform the following roles:

- NovoDemoSource is a DataSource application that shows how to receive requests from peers and send data in response. The application demonstrates the usage of all three types of Publisher.
- NovoDemoSink is a DataSource application that shows how to subscribe to data that is broadcast from another DataSource.
- NovoChannelSource is a DataSource application that shows how to perform bi-directional communication with a StreamLink client; for example, to exchange trade messages.

The three example applications all contain an src folder containing the source code. Source code is also provided for the XyzTrade system.

Each of the example applications is provided as an executable JAR. To run

the application, navigate to the relevant application directory in the examples

folder and execute the JAR within the lib folder. For example:

```
cd {KIT_ROOT}/examples/demosource
java -jar lib/datasource-java-demosource-{VERSION}.jar
```

Note that the example folders all contain a conf folder containing

the DataSource configuration file and fields configuration file. In order for

the example applications to successfully start up, you will need to set up and

correctly configure a Caplin Liberator, and then edit the DataSource configuration

file to reference your Liberator installation.

DataSource for Java supports latency chaining both as a latency chain source and as a latency chain link. For more information on latency chaining, see Record latency in record updates.

A latency chain is a way of measuring the latency of any given message as it

passes through connected systems. The message is timestamped at origin.

As the message is transmitted from DataSource to DataSource (and on through the Liberator),

each DataSource records an entry and exit timestamp difference.

This allows the end user to see the latency at each point in the system.

Enable latency chaining by adding the following line to the configuration file:

latency-chain-enable TRUE

The first DataSource in the chain should call RecordMessage.setInitialLatencyChainTime(long) with the initial timestamp for the latency chain.

The DataSource automatically handles latency chain links, if latency chaining is enabled and the initial latency chain timestamp field is present. The DataSource will then record the entry and exit time for each message.
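The arithmetic behind a latency chain can be sketched in a few lines. The class below is a hypothetical model, not the library's implementation or its wire format: it assumes the chain carries one initial timestamp plus, for each DataSource the message passes through, the difference between its exit and entry timestamps in milliseconds.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Illustrative model (hypothetical class) of a latency chain for one message. */
final class LatencyChain {
    private final long initialTimeMillis;
    private final List<Long> hopLatenciesMillis = new ArrayList<>();

    /** initialTimeMillis is the timestamp set at the origin of the message. */
    LatencyChain(long initialTimeMillis) {
        this.initialTimeMillis = initialTimeMillis;
    }

    /** Records the time the message spent inside one hop (exit minus entry). */
    void recordHop(long entryMillis, long exitMillis) {
        hopLatenciesMillis.add(exitMillis - entryMillis);
    }

    /** Per-hop latencies, in the order in which the hops were recorded. */
    List<Long> hopLatencies() {
        return Collections.unmodifiableList(hopLatenciesMillis);
    }

    /** Total time spent inside all recorded hops. */
    long totalHopLatency() {
        long total = 0L;
        for (long latency : hopLatenciesMillis) {
            total += latency;
        }
        return total;
    }

    /** Time elapsed between the origin timestamp and the given arrival time. */
    long endToEnd(long arrivalMillis) {
        return arrivalMillis - initialTimeMillis;
    }
}
```

Comparing the per-hop values with the end-to-end figure shows how much of the delay was spent inside each component and how much in between them.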

Please send bug reports and comments to Caplin Support.