Failover

Caplin Platform handles client and server failures, as well as failures in communication between client and server and between servers. This section provides a high-level overview of failover within the Caplin Platform. For details of the configurable failover strategies, see Resilience in StreamLink.

Client Failover

If you deploy the Caplin Platform with multiple Liberators, you can configure client applications (using StreamLink) to be aware of the Liberators available.

What does this mean in practice? On first connecting, a client application will choose which Liberator to connect to, based on a configured algorithm.

Typically, all Liberator instances are made available as primary nodes (an arrangement sometimes referred to as 'live/live' or 'hot/hot'), but other scenarios are also supported.
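
As a rough illustration of choosing an initial Liberator from a configured list, the sketch below assumes two made-up strategies: always trying the first listed Liberator, or picking one at random. The function name, strategy names, and hostnames are hypothetical and are not StreamLink's API or configuration; see Resilience in StreamLink for the algorithms StreamLink actually supports.

```python
import random
from typing import Optional, Sequence

# Hypothetical sketch: these names are illustrative, not part of the StreamLink API.

def choose_initial_liberator(
    liberators: Sequence[str],
    strategy: str = "ordered",
    rng: Optional[random.Random] = None,
) -> str:
    """Pick the Liberator a client connects to first.

    'ordered' always tries the first configured Liberator;
    'random' spreads first connections across all configured Liberators.
    """
    if not liberators:
        raise ValueError("no Liberators configured")
    if strategy == "ordered":
        return liberators[0]
    if strategy == "random":
        return (rng or random.Random()).choice(list(liberators))
    raise ValueError(f"unknown strategy: {strategy}")


nodes = ["liberator1.example.com", "liberator2.example.com"]  # hypothetical hosts
print(choose_initial_liberator(nodes))  # liberator1.example.com
print(choose_initial_liberator(nodes, "random", random.Random(7)))
```

Random selection is one simple way to spread first connections across live/live Liberators; an ordered list suits deployments where one Liberator should be preferred.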

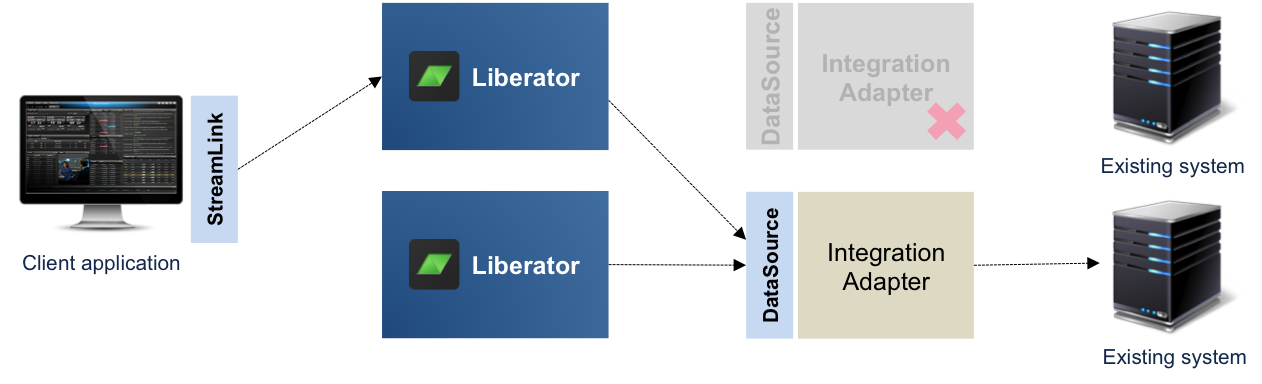

The following diagram shows a typical scenario with a client connected to one Liberator:

If a client application’s connection to its Liberator fails, StreamLink automatically attempts to reconnect to the same Liberator. If that fails, StreamLink uses the configured algorithm to choose another Liberator. The following diagram shows this:

StreamLink takes care of the reconnection logic. While reconnection is in progress, it makes callbacks to the client application to indicate the status of the connection.

The application can then indicate this status to the end-user, or take some other appropriate action.
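
The reconnection sequence described above can be modelled roughly as follows. This is a minimal sketch, assuming a hypothetical `connect` function that reports whether a connection succeeded and a hypothetical `on_status` callback standing in for StreamLink's status callbacks; the status names are made up. For simplicity it falls back to the other Liberators in list order rather than using a configured algorithm.

```python
from typing import Callable, Optional, Sequence

# Hypothetical sketch: `connect` and `on_status` stand in for a real transport
# and for StreamLink's status callbacks; neither is the StreamLink API.

def reconnect(
    current: str,
    liberators: Sequence[str],
    connect: Callable[[str], bool],
    on_status: Callable[[str, str], None],
    retries_same: int = 1,
) -> Optional[str]:
    """Return the Liberator reconnected to, or None if every attempt fails."""
    # First, retry the Liberator the client was already connected to.
    for _ in range(retries_same):
        on_status("RECONNECTING", current)
        if connect(current):
            on_status("CONNECTED", current)
            return current
    # Then fall back to the other configured Liberators, in list order.
    for candidate in liberators:
        if candidate == current:
            continue
        on_status("RECONNECTING", candidate)
        if connect(candidate):
            on_status("CONNECTED", candidate)
            return candidate
    on_status("UNAVAILABLE", current)
    return None


events = []
up = {"lib2"}  # pretend only lib2 is reachable
result = reconnect("lib1", ["lib1", "lib2"], lambda h: h in up,
                   lambda state, host: events.append((state, host)))
print(result)  # lib2
print(events)
# [('RECONNECTING', 'lib1'), ('RECONNECTING', 'lib2'), ('CONNECTED', 'lib2')]
```

The application would use each status callback to update what the end-user sees, for example showing a "reconnecting" indicator until a CONNECTED status arrives.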

Server Failover

Typically, you will deploy Caplin Platform with multiple instances of Liberator, Transformer, and Integration Adapters. There may be multiple types of Integration Adapter, each type handling different data and/or functionality, and multiple instances of each type.

Liberator, Transformer, and all Integration Adapters are built on the core integration API of the Caplin Integration Suite (the DataSource API), which handles the connections and communication between these components.

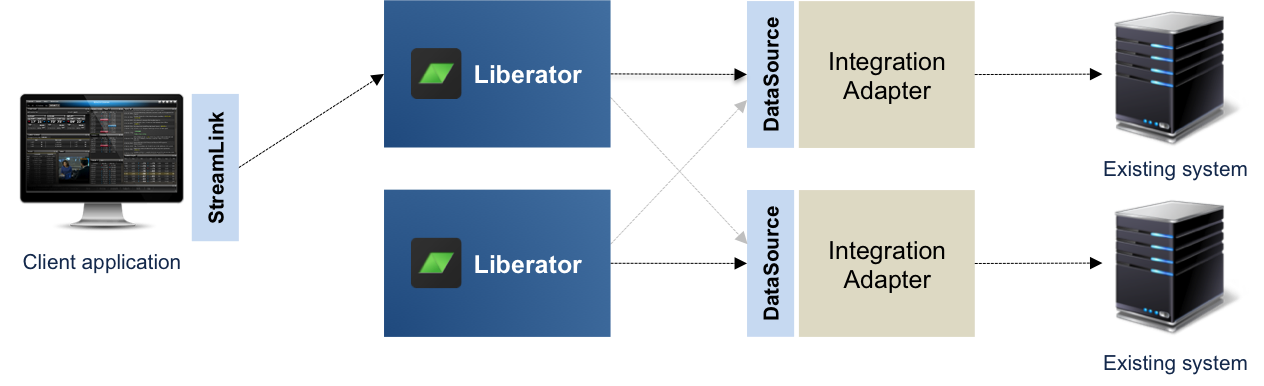

The connection topology and parameters are set up through configuration, and the configuration also defines how failover between these components should work. Several failover configurations are supported; for example, subscriptions can be balanced across a number of Integration Adapters, or the failover strategy can be determined by the priority of each Adapter.
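
To illustrate the difference between the two example strategies, here is a simplified, hypothetical model: priority-based selection sends subscriptions to the most preferred Adapter that is up, while balancing spreads them round-robin across all Adapters that are up. The `Adapter` class, its `priority` field, and `RoundRobin` are illustrative assumptions, not Caplin configuration items or DataSource API types.

```python
import itertools
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Hypothetical model of two failover strategies; names and fields are
# illustrative, not Caplin configuration options.

@dataclass
class Adapter:
    name: str
    priority: int  # lower value = preferred
    up: bool = True

def pick_by_priority(adapters: Iterable[Adapter]) -> Optional[Adapter]:
    """Send every subscription to the highest-priority Adapter that is up."""
    live = [a for a in adapters if a.up]
    return min(live, key=lambda a: a.priority) if live else None

class RoundRobin:
    """Balance subscriptions across all Adapters that are up."""

    def __init__(self, adapters: List[Adapter]):
        self.adapters = adapters
        self._counter = itertools.count()

    def pick(self) -> Optional[Adapter]:
        live = [a for a in self.adapters if a.up]
        if not live:
            return None
        return live[next(self._counter) % len(live)]


adapters = [Adapter("adapter-a", 1), Adapter("adapter-b", 2)]
print(pick_by_priority(adapters).name)  # adapter-a
rr = RoundRobin(adapters)
print([rr.pick().name for _ in range(3)])  # ['adapter-a', 'adapter-b', 'adapter-a']
```

In both strategies an Adapter that goes down is simply skipped, so new and re-routed subscriptions land on the remaining instances.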

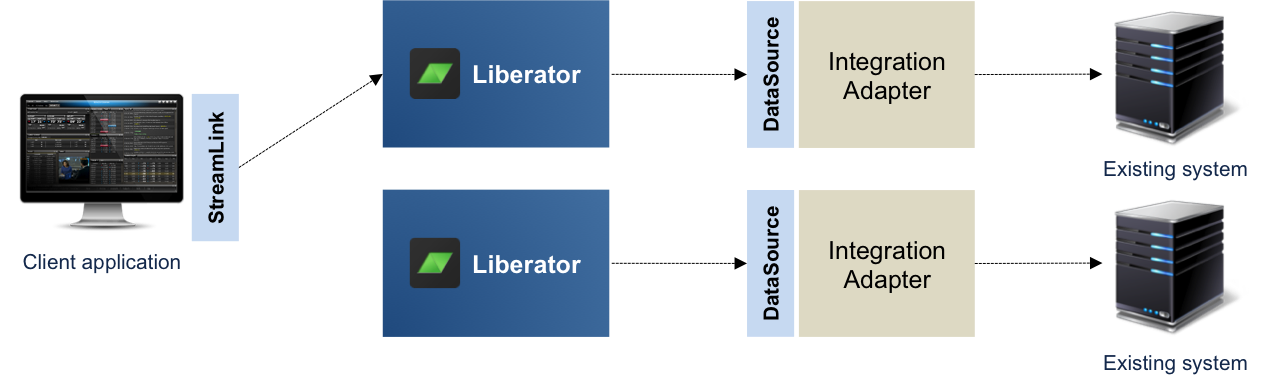

The following diagram shows a typical setup with both Liberator instances connected to both Integration Adapter instances:

When a connection between components fails, it is re-established whenever possible. If failover is required, existing subscriptions to data automatically fail over between components. At all times, changes in the status of subscribed data are passed through to client applications so that they can be indicated to the end-user (for example, "data has become unavailable").
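
The subscription failover behaviour can be sketched as a routing table on the Liberator side. This is a hypothetical model under simplifying assumptions: replacements are picked in list order, the status strings are invented, and `notify` stands in for passing status changes through to client applications. In the Caplin Platform this is handled internally by the DataSource API; none of these names belong to it.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical model: not part of the DataSource API.
class SubscriptionTable:
    """Tracks which Integration Adapter currently serves each subject."""

    def __init__(self, adapters: List[str], notify: Callable[[str, str], None]):
        self.up: Dict[str, bool] = {a: True for a in adapters}  # adapter -> connected?
        self.routes: Dict[str, Optional[str]] = {}  # subject -> serving adapter
        self.notify = notify  # passes status changes towards client applications

    def _first_up(self) -> Optional[str]:
        # Replacement policy for this sketch: first connected adapter in list order.
        return next((a for a, ok in self.up.items() if ok), None)

    def subscribe(self, subject: str) -> None:
        self.routes[subject] = self._first_up()
        if self.routes[subject] is None:
            self.notify(subject, "unavailable")

    def adapter_failed(self, adapter: str) -> None:
        self.up[adapter] = False
        for subject, served_by in list(self.routes.items()):
            if served_by == adapter:
                # Move the subscription to another adapter if one is connected.
                self.routes[subject] = self._first_up()
                if self.routes[subject] is None:
                    self.notify(subject, "unavailable")

    def adapter_recovered(self, adapter: str) -> None:
        # The connection has been re-established; reattach orphaned subjects.
        self.up[adapter] = True
        for subject, served_by in list(self.routes.items()):
            if served_by is None:
                self.routes[subject] = adapter
                self.notify(subject, "available")


log = []
table = SubscriptionTable(["adapter-a", "adapter-b"], lambda s, st: log.append((s, st)))
table.subscribe("/FX/EURUSD")
table.adapter_failed("adapter-a")
print(table.routes)  # {'/FX/EURUSD': 'adapter-b'}
table.adapter_failed("adapter-b")
table.adapter_recovered("adapter-a")
print(log)  # [('/FX/EURUSD', 'unavailable'), ('/FX/EURUSD', 'available')]
```

In this model a successful failover is invisible to the client; only a subscription with no remaining Adapter is reported as unavailable, and it is reported as available again once an Adapter recovers.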

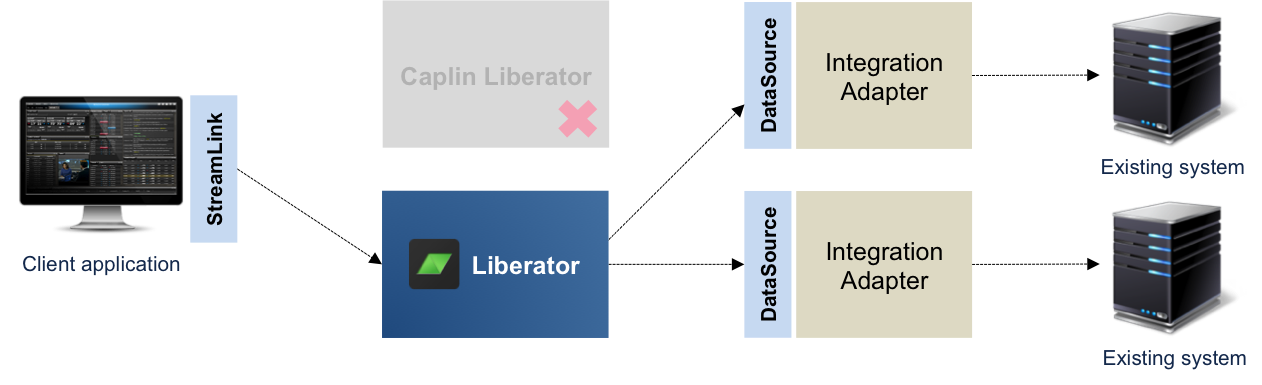

The following diagram shows Liberators using the remaining Integration Adapter instance when the other instance fails: